A Tourist's Guide to Local LLMs

From magic tricks to mundane utility

Running LLMs at home in 2025 is a bit like owning a personal computer in 1977 — hard to justify on practical grounds alone. And yet, watching my own laptop perform the same magic tricks that ChatGPT once dazzled us with — it feels like glimpsing a postcard from the future.

This outline is a starting point for anyone curious about local AI. The goal is to get you up and running using the hardware you already own, with no coding required.

Hardware

LLMs are memory-hungry beasts, making RAM the biggest hardware constraint. The more memory you have, the larger (that is, smarter) models you'll be able to run.

Macs with M-series processors are uniquely well-suited because their unified memory architecture lets the GPU draw on the same pool of RAM as the rest of the system. If you bought one within the last couple of years, you're in good shape.

On Windows or Linux, any mid-range gaming laptop with a discrete GPU should suffice, while a high-end gaming desktop is more than capable.

Software

Choose your champion:

- Jan: Simple, clean, and open source. My top pick for most.

- LM Studio: Also simple and clean, but not open source.

- FlowDown: Mac-specific native app that consumes fewer system resources.

User experience can still be a bit uneven, but these three do a good job of streamlining the download process and providing a familiar chat interface.

Models

There is a dizzying number of open-weight models to choose from. I'll give specific model recommendations later, but it helps to understand a few key concepts first:

- Parameters refers to the size of the model, typically rounded to the nearest billion. So a "32B" is significantly larger than an "8B". Larger generally means smarter.

- Multimodal means the model can accept images (and sometimes other file types) as input, in addition to text.

- Reasoning means the model outputs an "internal monologue" before answering, which can improve logic, but increases processing time.

- Quantization is essentially the "compression" of the model. As with images and MP3s, there's a tradeoff between file size and quality. Both Jan and LM Studio do a good job of estimating whether your hardware can run a particular model, so you shouldn't have to make this decision yourself.
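If you're curious how parameters and quantization combine into a download size, here is a back-of-the-envelope sketch. It assumes the common rule of thumb that size is roughly parameter count times bits per weight, divided by 8. The function names and the 70% headroom figure are my own illustrative choices, and the estimate ignores extras such as context memory, so treat the apps' built-in estimates as the better guide.

```python
# Rough rule of thumb (an approximation, not an exact formula):
# size in bytes ~ number of parameters x bits per weight / 8

def estimate_model_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_memory(params_billions: float, bits_per_weight: float,
                   memory_gb: float, headroom: float = 0.7) -> bool:
    """Guess whether the weights fit in memory.

    `headroom` is a hypothetical safety margin: only this fraction of memory
    is assumed to be available, leaving the rest for the OS and other apps.
    """
    needed_gb = estimate_model_size_gb(params_billions, bits_per_weight)
    return needed_gb <= memory_gb * headroom


if __name__ == "__main__":
    for params, bits in [(4, 8), (8, 4), (32, 4)]:
        size = estimate_model_size_gb(params, bits)
        verdict = "fits" if fits_in_memory(params, bits, memory_gb=16) else "too big"
        print(f"{params}B at {bits}-bit: about {size:.0f} GB ({verdict} on a 16 GB machine)")
```

The takeaway is that halving the bits per weight roughly halves the file, which is why a heavily quantized large model can squeeze into the same memory as a lightly quantized small one.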

Top Attractions

While LLMs running on consumer hardware still trail their cloud-based counterparts in many ways, the historical trend of shrinking supercomputers into consumer devices suggests plenty of improvement ahead.

There's already a growing number of use cases where local AI shows impressive promise and real utility.

Visual Geo-Guessing

My favorite "magic trick" for demonstrating local AI's potential is visual geo-guessing.

In your app of choice, search for Gemma 3 — a multimodal model from Google. It comes in multiple sizes, but download the smallest "4B" version to begin with.

In a new chat, attach a photo — perhaps from your last vacation or taken from the nearest window. Ask it to analyze it for clues and guess where it was taken.

And prepare to be amazed by the level of detail and accuracy that's possible in just a 4GB download.

Cloud Sidekick

For practical, day-to-day use, keep Jan, LM Studio, or FlowDown open next to ChatGPT, Claude, or Gemini.

This makes it easy to copy every prompt into both. While cloud AIs still clearly have the edge, you might be surprised how often local AI provides a perfectly usable answer. It's a great way to develop an intuition for differences in models, capabilities, and failure modes.

Size matters a lot here — larger models "know" more and are generally better at following instructions. For me, the threshold for general utility is crossed somewhere around 20B+ parameters. Qwen 3 32B from Alibaba, Mistral Small from Mistral, and Gemma 3 all handle themselves admirably.
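Strictly optional, since this tour requires no coding: if copy-pasting prompts gets tedious, some of these apps can also run a local server that accepts OpenAI-style chat requests. The sketch below assumes such a server is switched on. The address is an assumption (it is LM Studio's usual default, so check your app's settings), and the model name is a placeholder for whatever identifier your app shows.

```python
import json
import urllib.request

# Assumption: a local server is running and accepts OpenAI-style chat requests.
# This address is LM Studio's usual default; other apps may use a different port.
LOCAL_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(prompt: str, model: str) -> dict:
    """Build the JSON body for a single-turn chat request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


def ask_local(prompt: str, model: str, url: str = LOCAL_URL, timeout: int = 120) -> str:
    """Send the prompt to the local server and return the reply text."""
    body = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # "your-model-id" is a placeholder; use the identifier shown in your app.
    print(ask_local("Explain unified memory in one sentence.", model="your-model-id"))
```

Pasting the same prompt into your cloud AI of choice then gives you the side-by-side comparison described above.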

Writing Assistant

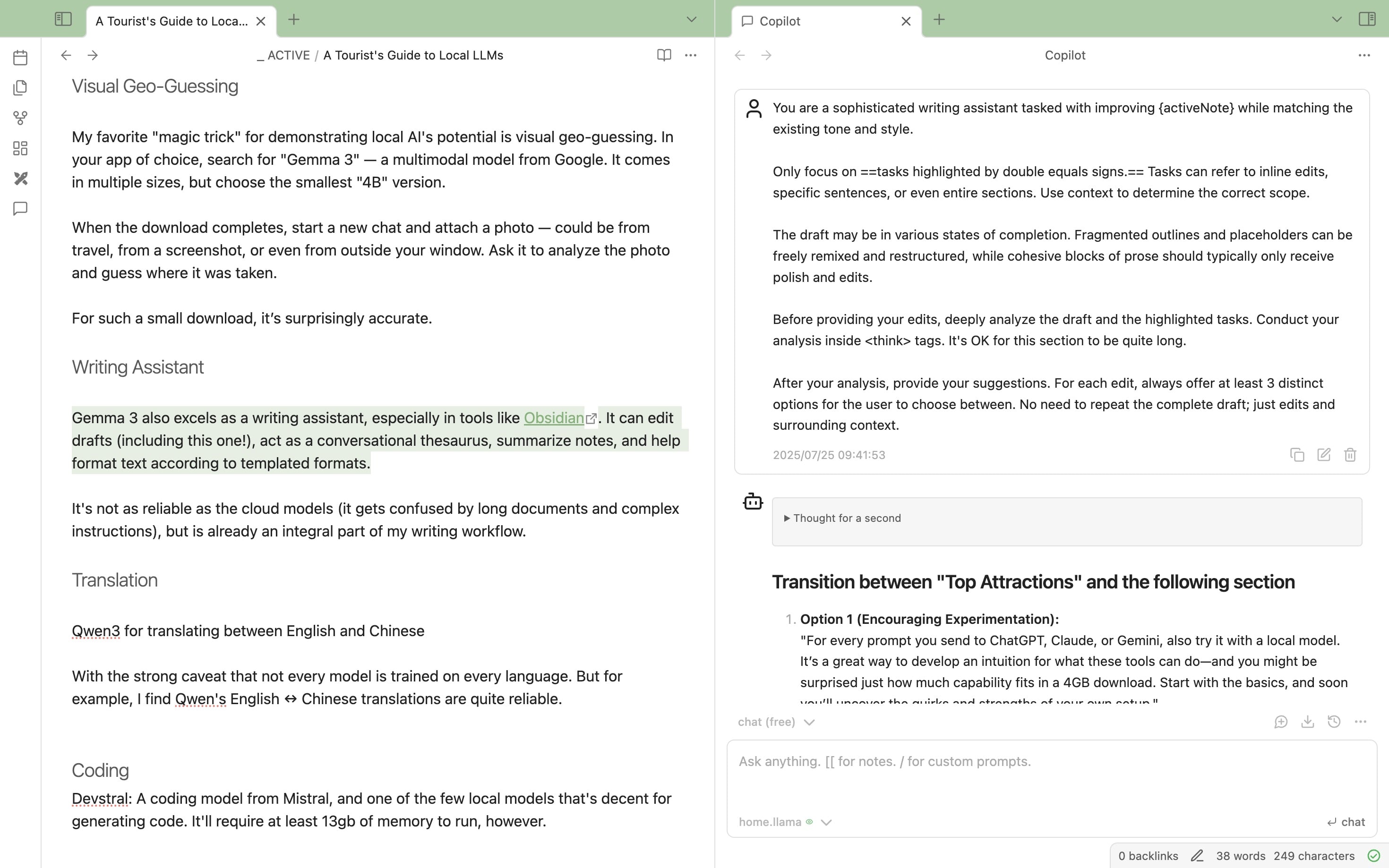

Gemma 3 also excels as a writing assistant — acting as a conversational thesaurus, summarizing notes, and formatting text according to templates. Integrated into Obsidian, I use it to edit drafts like this one!

It's not as reliable as Claude (it gets confused by long documents and complex instructions), but it's already an integral part of my writing workflow.

Colloquial Translation

While not all local models support every language, I'm quite happy with English and Chinese translations from Qwen 3.

I now prefer LLMs to dedicated translation apps since they're better at informal phrases and let me explore rabbit holes around word choices and etymology.

MCP Experiments

MCP (Model Context Protocol) is an advanced feature that lets LLMs call tools such as web search and file operations. Think of it as a very early-stage "app store" for adding capabilities to models: still rough, and it requires technical setup.
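To make "calling a tool" a little more concrete, here is a toy sketch of the kind of message involved. MCP messages follow the JSON-RPC 2.0 format, and a tool call uses the `tools/call` method. Everything else here is simplified for illustration: the `word_count` tool is hypothetical, and a real setup involves an actual MCP server, a connection handshake, and a transport layer that this sketch skips entirely.

```python
import json

# Hypothetical tool, for illustration only. Real MCP servers expose their own tools.
def word_count(text: str) -> str:
    return str(len(text.split()))


TOOLS = {"word_count": word_count}


def build_tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking for a tool to be run."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def handle_request(request: dict) -> dict:
    """Toy stand-in for a server: look up the tool, run it, wrap the result."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        return {**base, "error": {"code": -32601, "message": "Method not found"}}
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return {**base, "error": {"code": -32602, "message": "Unknown tool"}}
    text = tool(**params.get("arguments", {}))
    return {**base, "result": {"content": [{"type": "text", "text": text}], "isError": False}}


if __name__ == "__main__":
    request = build_tool_call(1, "word_count", {"text": "a postcard from the future"})
    print(json.dumps(request, indent=2))
    print(json.dumps(handle_request(request), indent=2))
```

The model's job is to decide when to emit a request like this and what arguments to fill in. The app and the tool do the rest, then hand the result back to the model as text.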

It's a fitting final stop on our tour, because it demonstrates the massive scope of what's possible. Local models can already integrate with everything from Airbnb listings to Zoom meetings — even if somewhat unreliably.

And while local capabilities aren't quite ready yet, the vision on this postcard grows clearer with each passing month.